Research

This page is incomplete and is being updated. :-)


Active Authentication on Mobile Devices

Rama Chellappa,

Larry Davis,

Vishal Patel,

Vlad I. Morariu

As mobile devices become more ubiquitous, it is important to verify the identity of the user during all interactions rather than just at login time. Active authentication (AA) systems address this issue by continuously monitoring smartphone sensors after initial access has been granted. However, AA remains an unsolved problem, especially for smartphones.

This project aims to develop more robust algorithms for AA on smartphones using various modalities such as face, touch gestures, and other physiological and behavioral traits.
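The page does not specify how AA decisions are made over time. As a minimal sketch, assuming each modality (face, touch, etc.) already produces a per-observation match score in [0, 1] for the enrolled user, a continuous authenticator can smooth those scores and lock the device once the smoothed confidence falls below a threshold. The class name, the exponential smoothing rule, and the parameter values are hypothetical, not the project's method.

```python
from dataclasses import dataclass


@dataclass
class ContinuousAuthenticator:
    """Hypothetical AA loop that smooths per-observation match scores in [0, 1]."""

    alpha: float = 0.3       # weight given to the newest observation (assumed value)
    threshold: float = 0.5   # lock when smoothed confidence drops below this (assumed)
    confidence: float = 1.0  # fully trusted right after the initial login

    def update(self, score: float) -> bool:
        """Fold in one match score; return True if the session may stay unlocked."""
        # Exponential moving average: recent observations dominate, but a single
        # bad sample (e.g. a blurry face frame) cannot flip the decision alone.
        self.confidence = self.alpha * score + (1.0 - self.alpha) * self.confidence
        return self.confidence >= self.threshold
```

Smoothing trades reaction time for robustness: an isolated poor capture does not lock the phone, while a sustained run of low scores (for example, after someone else picks it up) does within a few observations.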

Downloads

Mobile Face Video Dataset:

(Download link available only by email)

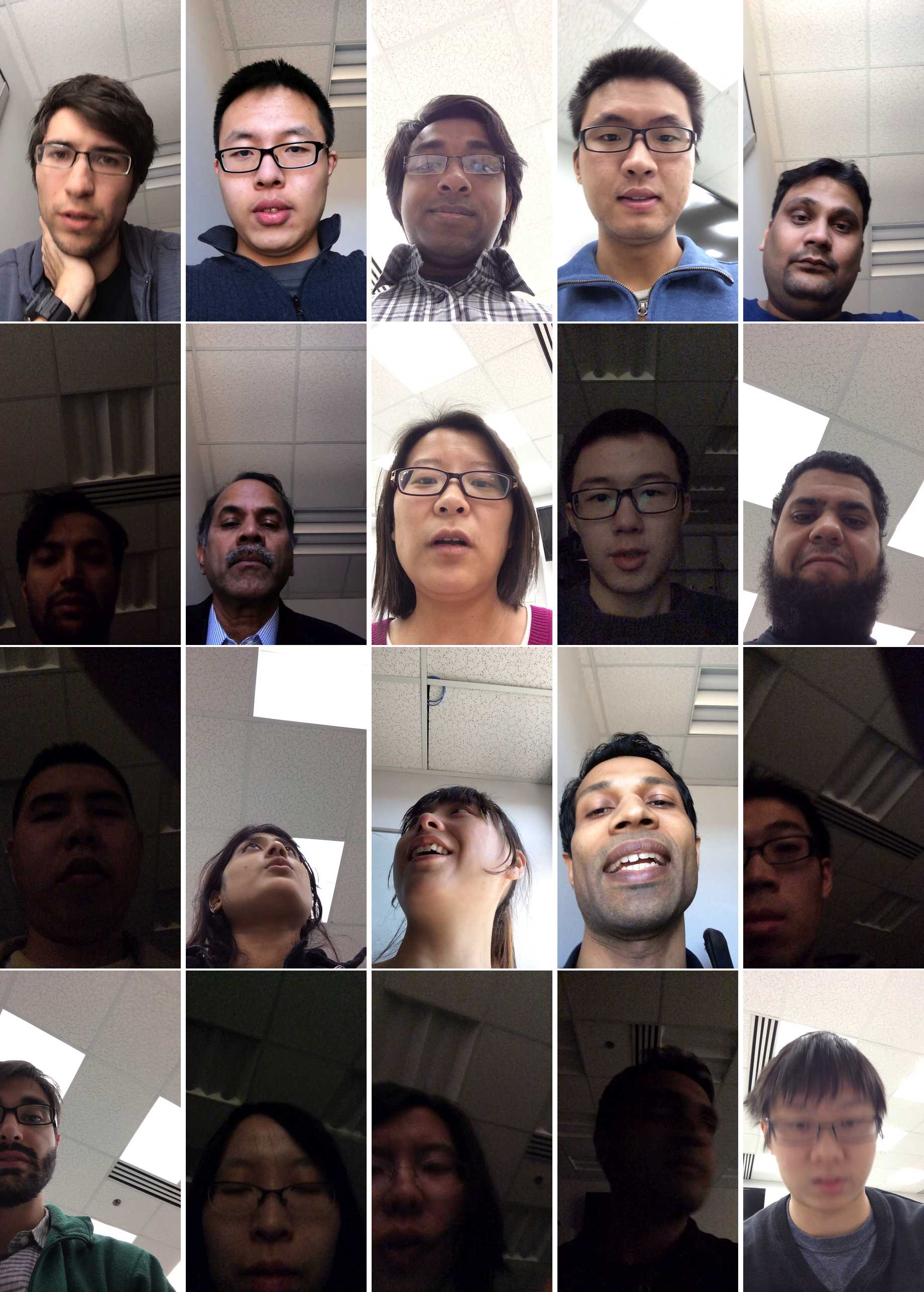

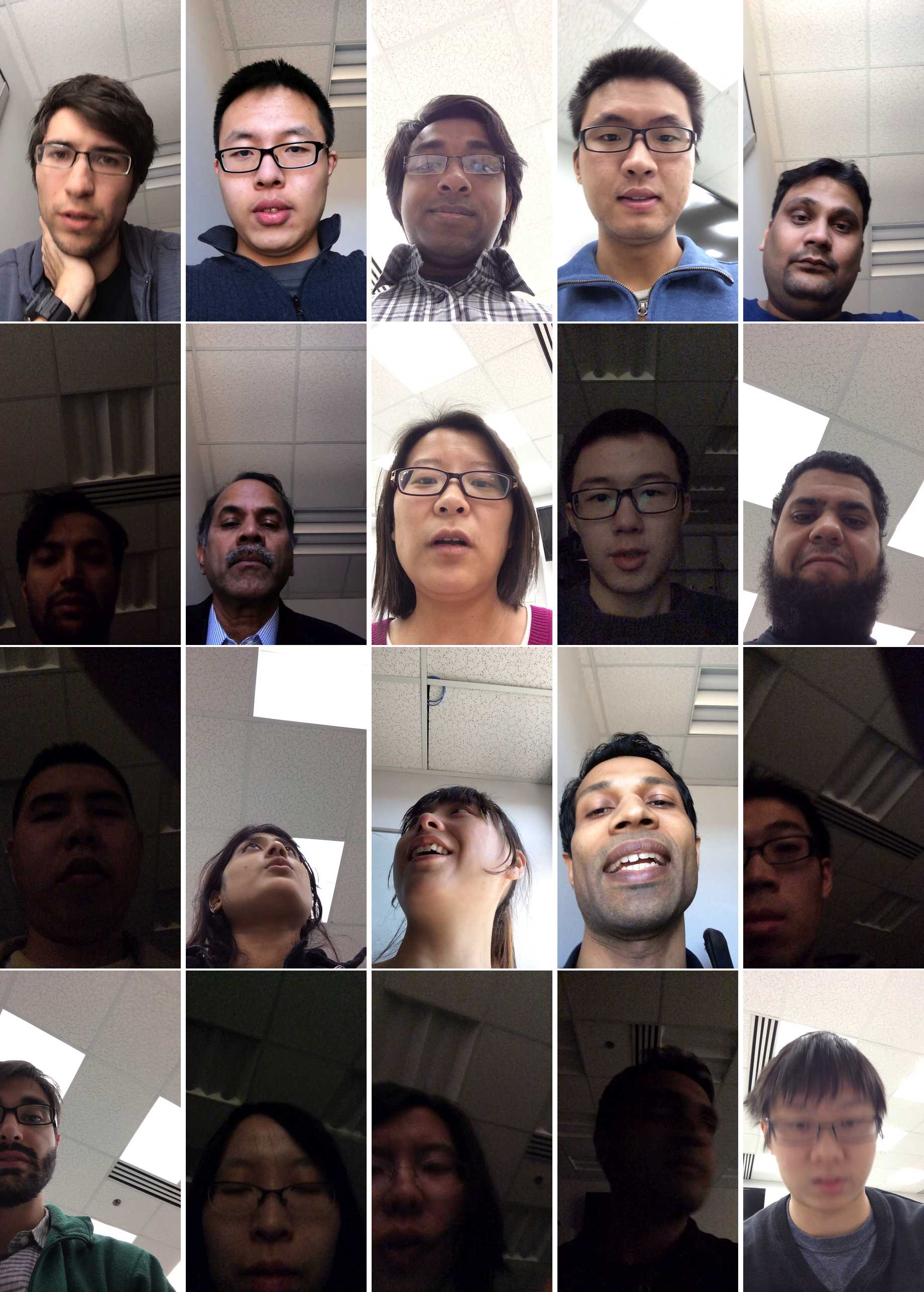

The dataset includes 750 face videos of 50 users, captured with the front-facing camera of a smartphone while the users were using the phone. For each user, five videos were taken in a well-lit room, another five in the same room with dim lighting, and the remaining five in a different room with natural daytime illumination. Each group of five videos consists of one enrollment video and four videos, each recorded while the user was performing one of four tasks in a custom-written app.
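As a quick consistency check of the numbers above (50 users × 3 lighting conditions × 5 videos = 750), the sketch below enumerates the implied video layout and shows one possible enrollment/probe split. The condition and video labels are hypothetical; the page does not describe the dataset's file naming or its evaluation protocol.

```python
from itertools import product

NUM_USERS = 50
# Hypothetical labels; the dataset's actual naming is not described on this page.
CONDITIONS = ("well_lit", "dim", "daylight")
VIDEOS_PER_CONDITION = ("enrollment", "task1", "task2", "task3", "task4")


def enumerate_videos():
    """List every (user, condition, video) triple implied by the description."""
    return list(product(range(1, NUM_USERS + 1), CONDITIONS, VIDEOS_PER_CONDITION))


def gallery_probe_split(videos):
    """One possible protocol (an assumption): enrollment videos form the
    gallery and the task videos are used as probes."""
    gallery = [v for v in videos if v[2] == "enrollment"]
    probes = [v for v in videos if v[2] != "enrollment"]
    return gallery, probes
```

Under this split, each user contributes three enrollment videos (one per lighting condition) and twelve task videos.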

If you want to download the dataset, please send an email to rama (at) umiacs*umd*edu (replace the *'s with dots) with "Mobile Face Dataset Download Request" in the subject line. Please also include basic information about yourself, your institution, and the reason you need the dataset. You will receive the download link in a reply to your email.


UMDAA-02:

The University of Maryland Active Authentication Dataset-02 (UMDAA-02) data collection drive (15 Oct. to 20 Dec. 2015) yielded 141.14 GB of smartphone sensor signals collected from 48 volunteers who used Nexus 5 phones as their primary device for about one week. The sensors recorded include the front-facing camera, touchscreen, gyroscope, accelerometer, magnetometer, light sensor, GPS, Bluetooth, WiFi, proximity sensor, temperature sensor, and pressure sensor. The data collection application also stored the timing of screen lock and unlock events, the start and end timestamps of calls, the currently running foreground application, etc. The usage information is organized into "sessions": a session starts when the user unlocks the phone and ends when the phone returns to the locked state. Two modalities are provided here: (a) front-camera captures (images are captured at 3 fps during the first 60 seconds of each session) and (b) touch data.
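Because the data are organized into lock/unlock sessions, a natural first step is to rebuild sessions from an event log and check how many face frames to expect. The sketch below assumes a hypothetical event format of (timestamp in seconds, "unlock" or "lock") pairs; the dataset's actual file layout is not described here. At a nominal 3 fps over at most the first 60 seconds, a session yields at most 3 × 60 = 180 face images.

```python
def split_sessions(events):
    """Pair each 'unlock' with the next 'lock' to form (start, end) sessions.

    events: iterable of (timestamp_seconds, kind), kind in {"unlock", "lock"}
    (a hypothetical format). An unlock with no later lock, e.g. because the
    log ends while the phone is unlocked, is dropped.
    """
    sessions = []
    start = None
    for t, kind in sorted(events):
        if kind == "unlock":
            start = t  # a repeated unlock restarts the open session
        elif kind == "lock" and start is not None:
            sessions.append((start, t))
            start = None
    return sessions


def expected_face_frames(start, end, fps=3, window=60):
    """Nominal number of face captures: fps frames per second, first `window` s only."""
    return int(min(end - start, window) * fps)
```

For example, a 45-second session nominally yields 135 frames, while any session of 60 seconds or longer is capped at 180.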

Downloads:

UMDAA-02 face dataset

UMDAA-02 touch dynamics dataset


Independent Moving Object Detection

Rama Chellappa, Joshua Broadwater


The Science of Land Target Spectral Signatures

Rama Chellappa, Joshua Broadwater

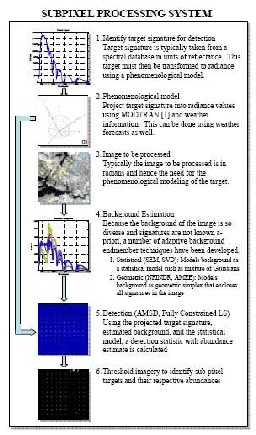

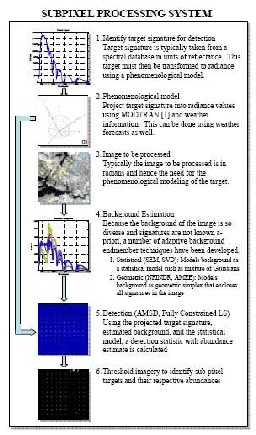

This project is a collaboration with the Georgia Institute of Technology, University of Hawaii, Rochester Institute of Technology, Clark Atlanta University, and University of Florida. The Multiple University Research Initiative (MURI) team is focused on understanding the physical phenomena that affect target spectral signatures, such as weathering, atmospheric effects, and camera position. Our part of the project focuses on incorporating physical phenomena into automatic target detection/recognition (ATD/R) algorithms for hyperspectral imagery. Our past research has provided improved parametric detectors that incorporate sum-to-one and non-negativity abundance constraints. Our current research revolves around the development of physics-based kernels for non-parametric detectors and of advanced adaptive threshold techniques based on importance sampling.
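The page does not describe the detectors' test statistics, so the sketch below only illustrates the general idea of setting a detection threshold by importance sampling. It assumes (for illustration) a detector output that is standard normal when no target is present, and uses the textbook mean-shifted proposal; it is not the project's method. The function names and the target false-alarm rate are hypothetical.

```python
import math
import random


def tail_prob_is(eta, n=100_000, seed=0):
    """Importance-sampling estimate of P(T > eta) for T ~ N(0, 1).

    Samples come from the mean-shifted proposal N(eta, 1), so about half of
    them land in the rare region T > eta. Each hit is reweighted by the
    likelihood ratio p(x) / q(x) = exp(-eta * x + eta**2 / 2).
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        x = rng.gauss(eta, 1.0)
        if x > eta:
            total += math.exp(-eta * x + 0.5 * eta * eta)
    return total / n


def threshold_for_pfa(target_pfa, lo=0.0, hi=10.0, iters=30, n=20_000, seed=0):
    """Bisect for the threshold whose estimated false-alarm rate equals target_pfa.

    Reusing the same seed gives common random numbers, which in practice
    keeps the estimate monotonically decreasing in eta during the bisection.
    """
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if tail_prob_is(mid, n, seed) > target_pfa:
            lo = mid  # too many false alarms: raise the threshold
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

Plain Monte Carlo would need on the order of 1/P_FA noise-only samples just to observe a handful of false alarms; the shifted proposal places samples where the rare events occur, so small false-alarm rates can be estimated with far fewer draws.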

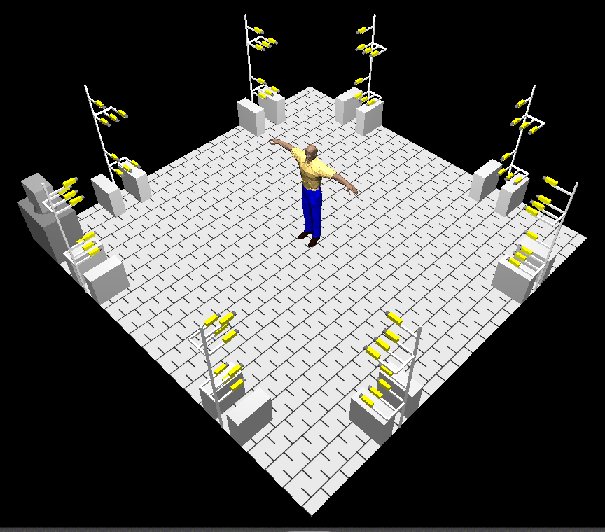

Algorithms and Architectures For Vision Based Inference From Distributed Cameras

Rama Chellappa,

Ashok Veeraraghavan,

Gaurav Aggarwal

Project page

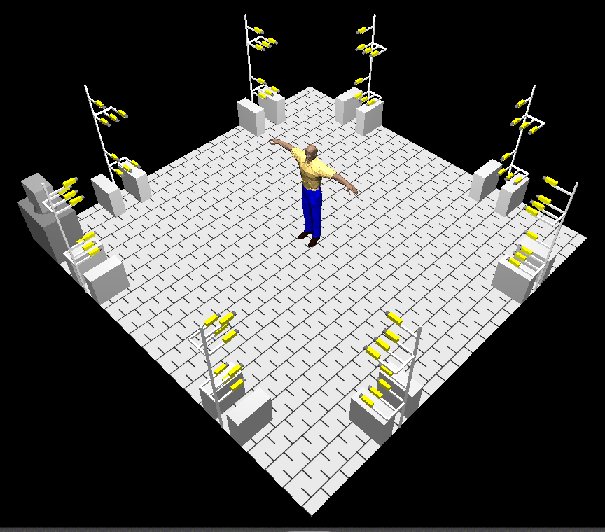

This project is a collaboration with Dr. Shuvra Bhattacharya and Dr. Wayne Wolf. It develops new techniques for distributed smart camera networks through an integrated exploration of distributed algorithms, embedded architectures, and software synthesis techniques. The objective is to build real-time systems for coordinated inference from multiple cameras. Specifically, we are investigating a series of complex smart camera algorithms and applications, namely human gesture recognition; self-calibration of the distributed camera network; detection, tracking, and fusion of trajectories using distributed cameras; view synthesis using image-based visual hulls; gait-based human recognition; and human activity analysis.
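The text lists trajectory fusion across distributed cameras without specifying the method. As a minimal sketch under simplifying assumptions (each camera reports a ground-plane position with an isotropic variance, and the estimates are already associated to the same target and time step), the snippet below fuses them by inverse-variance weighting, a standard textbook rule rather than the project's algorithm. The data format is hypothetical.

```python
def fuse_positions(estimates):
    """Fuse per-camera ground-plane estimates of one target at one time step.

    estimates: list of ((x, y), variance) pairs, one per camera (hypothetical
    format). Returns the inverse-variance-weighted position and fused variance.
    """
    if not estimates:
        raise ValueError("need at least one camera estimate")
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    x = sum(w * p[0] for w, (p, _) in zip(weights, estimates)) / total
    y = sum(w * p[1] for w, (p, _) in zip(weights, estimates)) / total
    return (x, y), 1.0 / total


def fuse_trajectories(per_camera_tracks):
    """per_camera_tracks: one equal-length list of ((x, y), var) per camera.

    Fuses the tracks time step by time step.
    """
    return [fuse_positions(list(step)) for step in zip(*per_camera_tracks)]
```

More confident cameras (smaller variance) pull the fused point toward their estimate, and the fused variance is always smaller than any single camera's.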



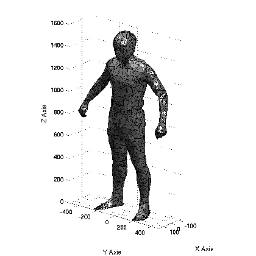

New Technology for Capture, Analysis and Visualisation of Human Movement Using Distributed Cameras

Rama Chellappa,

Aravind Sundaresan,

James Sherman

Project page

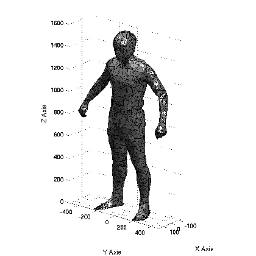

This project is a collaboration with the Biomotion Laboratory at Stanford University and the Media Research Laboratory at New York University.

The objective is to perform markerless motion capture using multiple calibrated cameras. We use shape models such as superquadrics to represent the human body; such models are essential for accurately tracking articulated motion.
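The page does not give the body model's parameterization. For reference, a superquadric is commonly described by its inside-outside function F = (|x/a1|^(2/e2) + |y/a2|^(2/e2))^(e2/e1) + |z/a3|^(2/e1), which is below 1 inside the shape, 1 on its surface, and above 1 outside. The sketch below evaluates it for one body-part primitive (e.g. a limb segment) in that primitive's local frame; the parameter values are illustrative, and the project's actual model may differ.

```python
def superquadric_f(x, y, z, a1, a2, a3, eps1, eps2):
    """Inside-outside function of a superquadric in its own (local) frame.

    a1, a2, a3: semi-axis lengths; eps1, eps2: squareness exponents
    (eps = 1 gives an ellipsoid, values near 0 give box-like shapes).
    Returns < 1 inside, 1 on the surface, > 1 outside.
    """
    xy = abs(x / a1) ** (2.0 / eps2) + abs(y / a2) ** (2.0 / eps2)
    return xy ** (eps2 / eps1) + abs(z / a3) ** (2.0 / eps1)


def is_inside(point, params):
    """params = (a1, a2, a3, eps1, eps2); point = (x, y, z) in the local frame."""
    return superquadric_f(*point, *params) < 1.0
```

The squareness exponents are what make superquadrics flexible enough for body parts: a point near the corner of the unit square, such as (0.9, 0.9, 0), lies outside the unit sphere (eps = 1) but inside the box-like shape obtained with eps = 0.1.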



Insect Inspired Navigation of Micro Air Vehicles

Rama Chellappa,

Mahesh Ramachandran,

Kaushik Mitra,

Ashok Veeraraghavan

Project page

This project aims at developing autonomous Micro Air Vehicles capable of navigation and terrain understanding. The project is a collaboration with the Aerospace Engineering and Mechanical Engineering Departments at the University of Maryland and the Visual Sciences Group at the Australian National University.

The current focus is on the following issues:

- Estimating the ego-motion of Micro Air Vehicles (MAVs) from a combination of visual and inertial sensors.

- Terrain understanding from videos acquired by MAVs.

- Simultaneous tracking and behavior analysis of social insects.
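The page does not say how the visual and inertial measurements are combined. To illustrate why fusing them helps, the sketch below swaps in a textbook complementary filter for a single attitude angle; it is not a visual-inertial ego-motion estimator. Integrated gyro rates give short-term accuracy but drift, while a noisy but drift-free absolute reference corrects the drift. In a visual-inertial system that reference could come from vision; here it is an accelerometer-derived tilt angle, and alpha = 0.98 is an arbitrary illustrative choice.

```python
def complementary_filter(gyro_rates, accel_angles, dt, alpha=0.98, theta0=0.0):
    """Fuse a gyro rate (rad/s) with an accelerometer-derived angle (rad).

    The gyro term tracks fast motion but drifts when integrated; the
    accelerometer term is noisy but drift-free. alpha sets the crossover
    between trusting the integrated gyro and the absolute reference.
    """
    theta = theta0
    history = []
    for omega, theta_acc in zip(gyro_rates, accel_angles):
        theta = alpha * (theta + omega * dt) + (1.0 - alpha) * theta_acc
        history.append(theta)
    return history
```

With a constant gyro bias b, plain integration drifts without bound, whereas this filter settles at alpha * b * dt / (1 - alpha).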


Multi-sensor Multi-nodal Fusion for Audio-Video Surveillance

Rama Chellappa,

Qinfen Zheng,

Aswin Sankaranarayanan,

Wu Hao,

Xue Mei,

Seong-Wook Joo

Project page

This project is a collaboration with BAE Systems and Prof. Jim McClellan of the Georgia Institute of Technology. It addresses issues related to surveillance and outdoor scene monitoring and develops algorithms for the following:


- PDA-based remote sentry devices for monitoring scene audio and video through secure wireless networks.

- Multi-nodal, multi-sensor fusion for robust tracking, with efficient use of each individual modality to reduce power consumption and prolong battery life.

- Multi-camera tracking (especially for non-overlapping views).


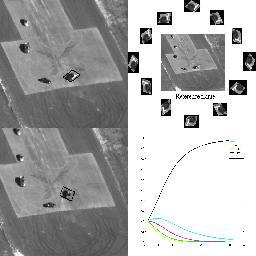

Verification and Identification for Surveillance Video

Rama Chellappa,

Zhanfeng Yue, and

Arunkumar Mohananchettiar

Project page

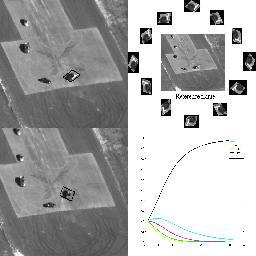

This project is a collaboration with SAIC Corporation. The objective is to develop automatic target verification and identification for airborne surveillance video. The following issues are addressed in the project:

- Target detection from airborne surveillance video (moving platform).

- Reliable target tracking.

- Shadow detection.

- A verification-by-synthesis algorithm using homography and template matching.

- An alternative verification system that accomplishes tracking and recognition simultaneously.
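The page does not detail the verification-by-synthesis algorithm. The sketch below, a minimal illustration under our own assumptions, shows the two ingredients named in the text: mapping points through a 3×3 homography and scoring a synthesized template against an observed patch with normalized cross-correlation (NCC). A full implementation would warp the whole template image before matching; the helper names and the 0.8 acceptance threshold are hypothetical.

```python
import numpy as np


def apply_homography(H, pts):
    """Map 2-D points (N x 2) through a 3x3 homography H."""
    H = np.asarray(H, dtype=float)
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])  # to homogeneous coordinates
    mapped = homog @ H.T
    return mapped[:, :2] / mapped[:, 2:3]  # back to Cartesian coordinates


def ncc(patch_a, patch_b):
    """Normalized cross-correlation of two equal-size patches, in [-1, 1]."""
    a = np.asarray(patch_a, dtype=float).ravel()
    b = np.asarray(patch_b, dtype=float).ravel()
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom > 0 else 0.0


def verify(synthesized_patch, observed_patch, threshold=0.8):
    """Hypothetical decision rule: accept if the synthesized view matches well."""
    return ncc(synthesized_patch, observed_patch) >= threshold
```

NCC is invariant to affine changes in brightness (gain and offset), which is one reason it is a common choice for template matching across varying illumination.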

